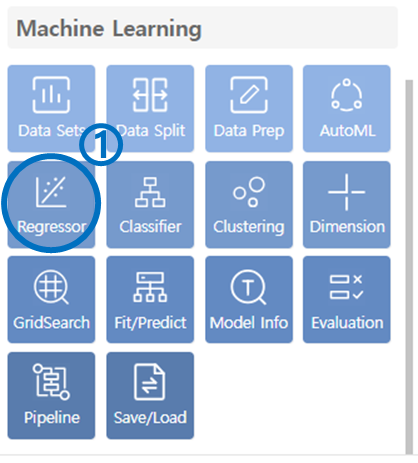

5. Regressor

Click Regressor in the Machine Learning category.

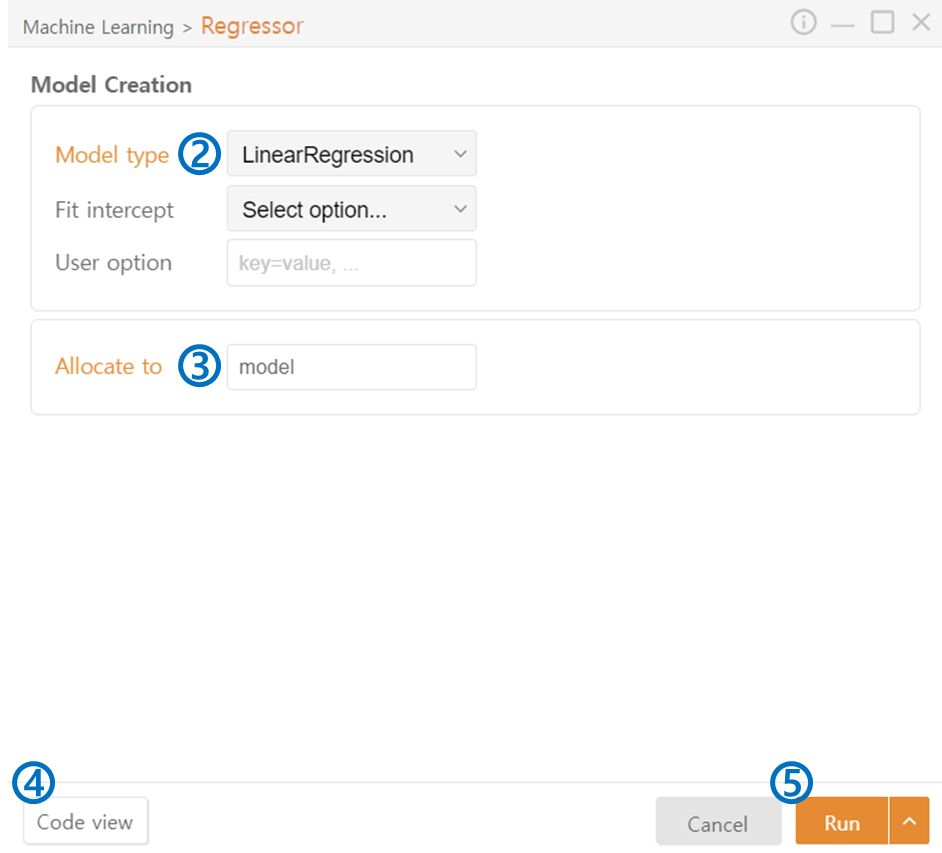

Model Type: Choose the regression model.

Allocate to: Enter the name of the variable that will hold the created machine learning model.

Code View: Preview the generated code.

Run: Execute the code.

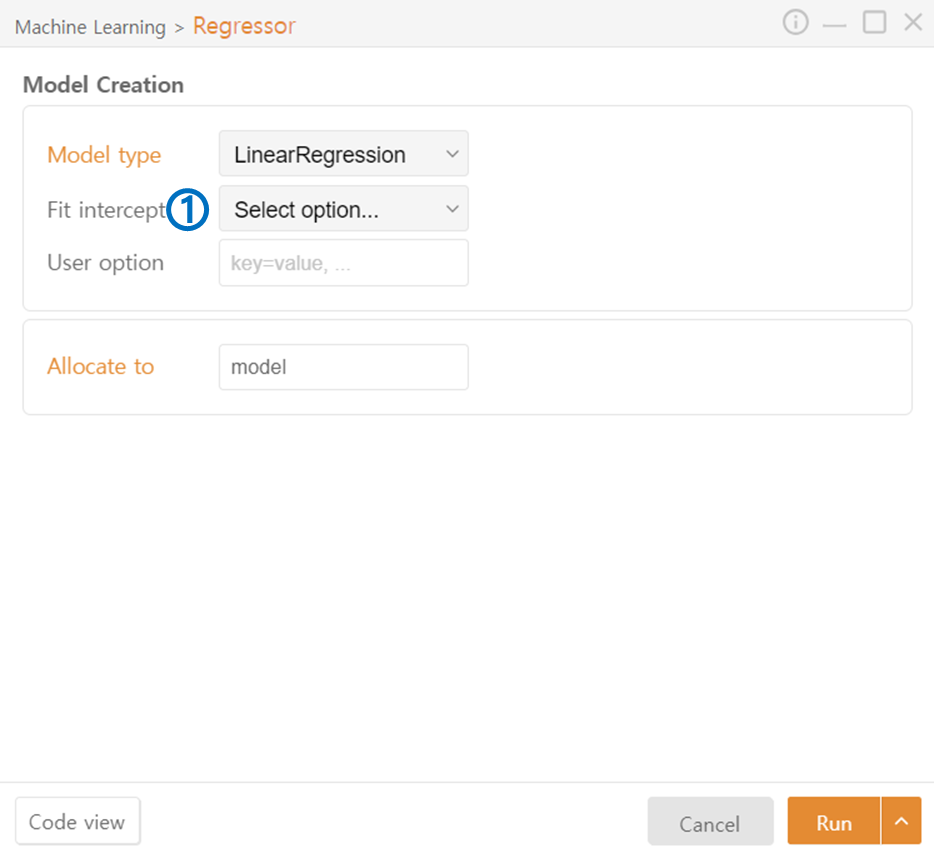

Linear Regression

Fit Intercept: Choose whether the model fits an intercept (bias) term.
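As a rough illustration of what Fit Intercept changes, the sketch below assumes the option maps to the `fit_intercept` parameter of scikit-learn's `LinearRegression`. The toy data and variable names are made up for this example, and the code the tool generates may look different.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# Toy data generated from y = 2x + 5 (illustrative values only)
X = np.array([[0.0], [1.0], [2.0], [3.0]])
y = 2.0 * X.ravel() + 5.0

# With an intercept, the model can recover both the slope and the offset
with_intercept = LinearRegression(fit_intercept=True).fit(X, y)

# Without an intercept, the fitted line is forced through the origin
without_intercept = LinearRegression(fit_intercept=False).fit(X, y)

print(with_intercept.coef_, with_intercept.intercept_)
print(without_intercept.coef_, without_intercept.intercept_)
```

Turning the intercept off is usually only appropriate when the data is already centered or the relationship is known to pass through the origin.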

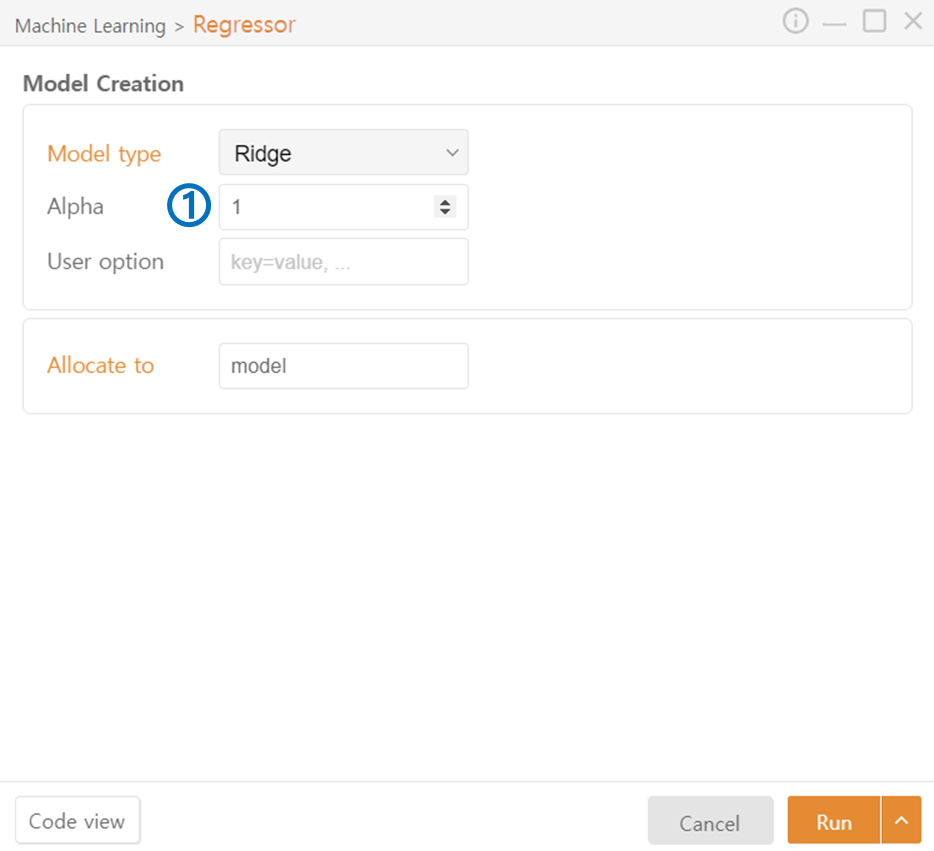

Ridge / Lasso

Alpha: Sets the regularization strength. Larger values apply stronger regularization and shrink the coefficients more.
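The effect of Alpha can be seen by fitting the same data with a small and a large value. This is a minimal sketch that assumes the option corresponds to the `alpha` parameter of scikit-learn's `Ridge` and `Lasso`; the synthetic data and the chosen alpha values are arbitrary.

```python
import numpy as np
from sklearn.linear_model import Lasso, Ridge

# Synthetic data: the third feature has no real effect on the target
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
y = X @ np.array([3.0, 0.5, 0.0]) + rng.normal(scale=0.1, size=50)

# Ridge (L2 penalty): a larger alpha shrinks all coefficients toward zero
ridge_weak = Ridge(alpha=0.1).fit(X, y)
ridge_strong = Ridge(alpha=100.0).fit(X, y)

# Lasso (L1 penalty): a larger alpha shrinks coefficients and can set some to exactly zero
lasso_weak = Lasso(alpha=0.01).fit(X, y)
lasso_strong = Lasso(alpha=1.0).fit(X, y)

print("ridge:", ridge_weak.coef_, ridge_strong.coef_)
print("lasso:", lasso_weak.coef_, lasso_strong.coef_)
```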

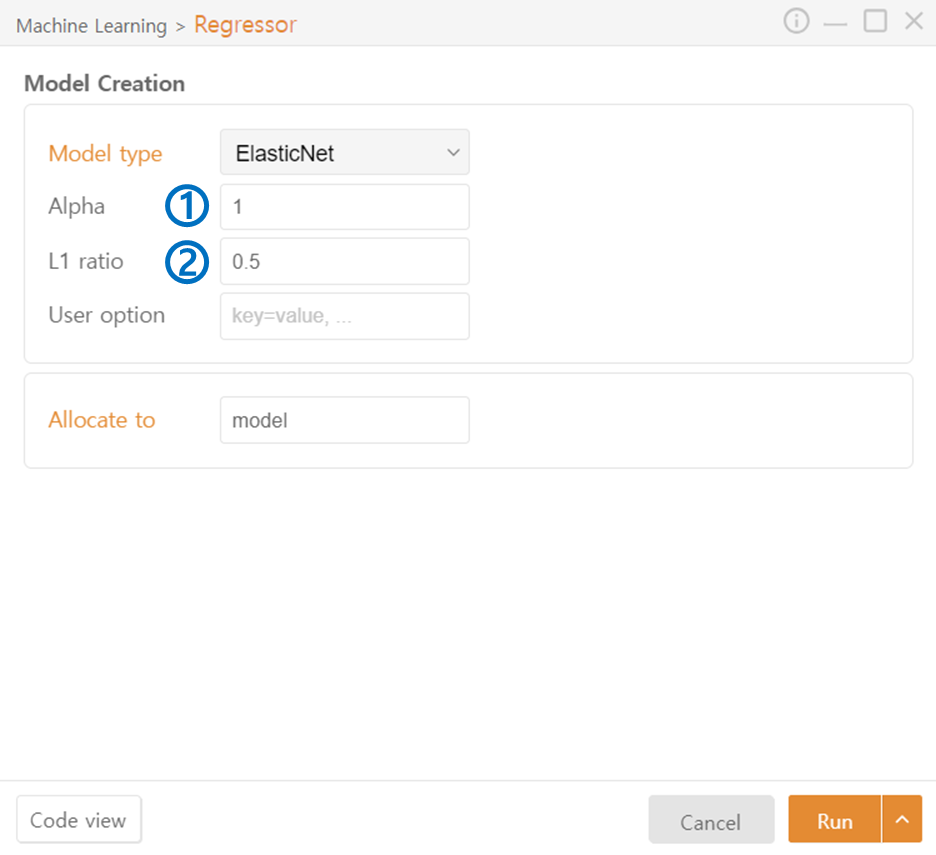

ElasticNet

Alpha: Sets the overall regularization strength. Larger values apply stronger regularization.

L1 ratio: Sets the mix between L1 (Lasso) and L2 (Ridge) regularization, from 0 (pure L2) to 1 (pure L1).
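To make the role of L1 ratio concrete, the sketch below assumes the two options map to `alpha` and `l1_ratio` in scikit-learn's `ElasticNet`. It checks that an L1 ratio of 1.0 behaves like a plain Lasso on the same data; the data and values are illustrative only.

```python
import numpy as np
from sklearn.linear_model import ElasticNet, Lasso

# Synthetic regression data (illustrative only)
rng = np.random.default_rng(1)
X = rng.normal(size=(60, 4))
y = X @ np.array([2.0, -1.0, 0.0, 0.0]) + rng.normal(scale=0.1, size=60)

# An even mix of L1 and L2 penalties
mixed = ElasticNet(alpha=0.1, l1_ratio=0.5).fit(X, y)

# l1_ratio=1.0 removes the L2 part, so the penalty is the same as Lasso's
pure_l1 = ElasticNet(alpha=0.1, l1_ratio=1.0).fit(X, y)
lasso = Lasso(alpha=0.1).fit(X, y)

matches_lasso = np.allclose(pure_l1.coef_, lasso.coef_)
print(mixed.coef_)
print(pure_l1.coef_, matches_lasso)
```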

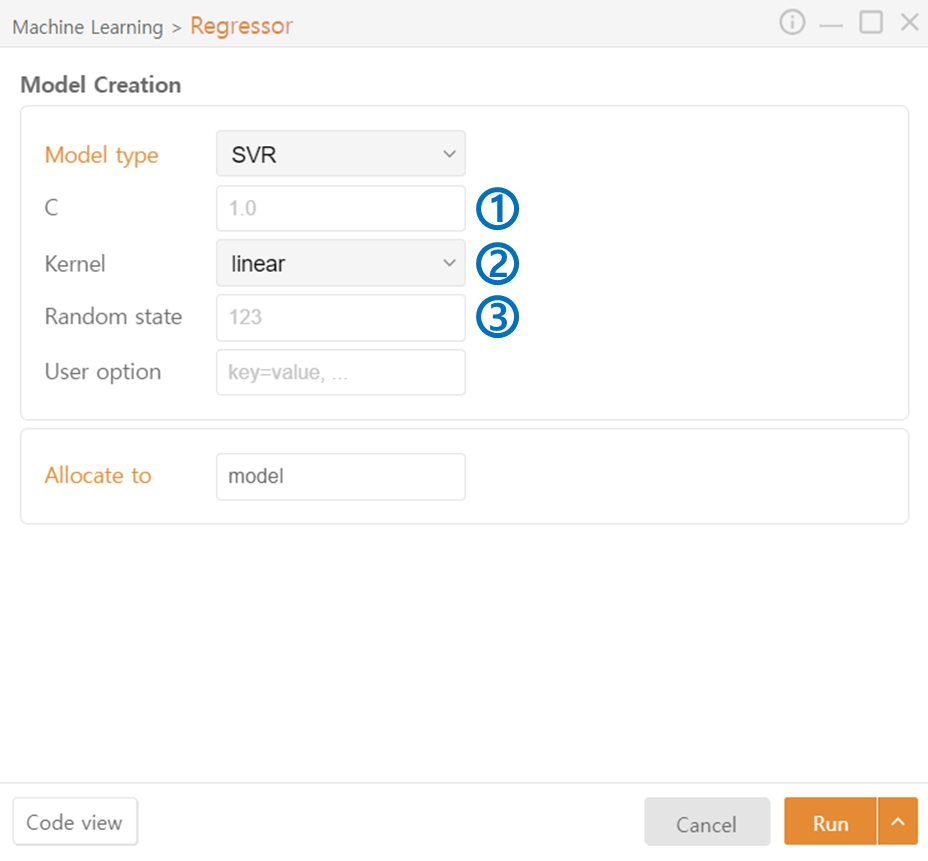

SVR (Support Vector Regressor)

C: Regularization parameter. The strength of regularization is inversely proportional to C, so higher values penalize training errors more heavily and make the model fit the training data more closely.

Kernel: Specifies the kernel function (such as poly, rbf, or sigmoid), which implicitly maps the data to a higher-dimensional space and largely determines model complexity.

Degree (Poly): Sets the degree of the polynomial kernel.

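Gamma (Poly, rbf, sigmoid): Kernel coefficient that scales the kernel function. With the rbf kernel, higher values narrow the influence of each training sample and produce a more flexible fit.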

Coef0 (Poly, sigmoid): Independent (constant) term in the kernel function, controlling its offset.

Random state: Sets the seed value for the random number generator used in model training.
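The options above can be pictured as constructor arguments. The following sketch assumes they map to scikit-learn's `SVR` parameters `C`, `kernel`, `degree`, `gamma`, and `coef0`. The scikit-learn `SVR` constructor does not itself take a `random_state` argument, so it is left out here; the data and values are illustrative, and the generated code may differ.

```python
import numpy as np
from sklearn.svm import SVR

# One-dimensional toy problem: approximate a sine curve
X = np.linspace(0, 6, 40).reshape(-1, 1)
y = np.sin(X).ravel()

# RBF kernel: only C and gamma matter; degree and coef0 are ignored
rbf_model = SVR(C=10.0, kernel="rbf", gamma="scale")
rbf_model.fit(X, y)
rbf_r2 = rbf_model.score(X, y)  # R^2 on the training data

# Polynomial kernel: degree, gamma, and coef0 all take effect
poly_model = SVR(C=10.0, kernel="poly", degree=3, gamma="scale", coef0=1.0)
poly_model.fit(X, y)
poly_pred = poly_model.predict(X)

print(rbf_r2, poly_pred.shape)
```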

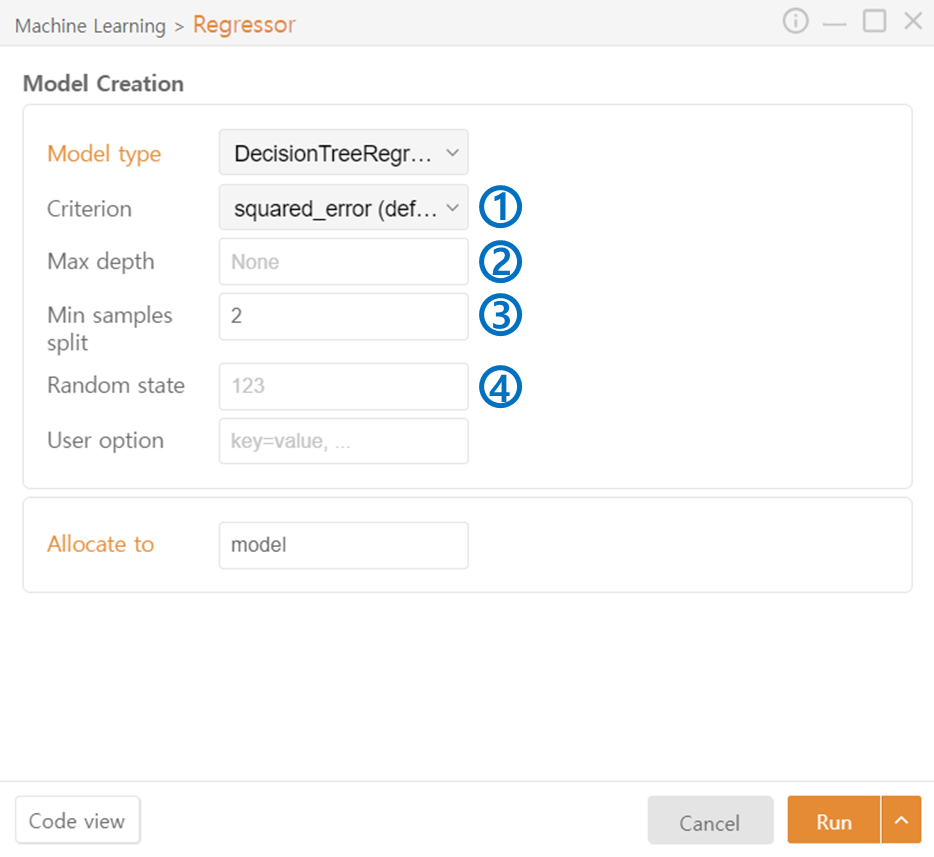

DecisionTree Regressor

Criterion: Specifies the measure used for node splitting.

Max depth: Specifies the maximum depth of the tree.

Min Samples Split: Specifies the minimum number of samples required to split a node.

Random state: Sets the seed value for the random number generator used in model training.

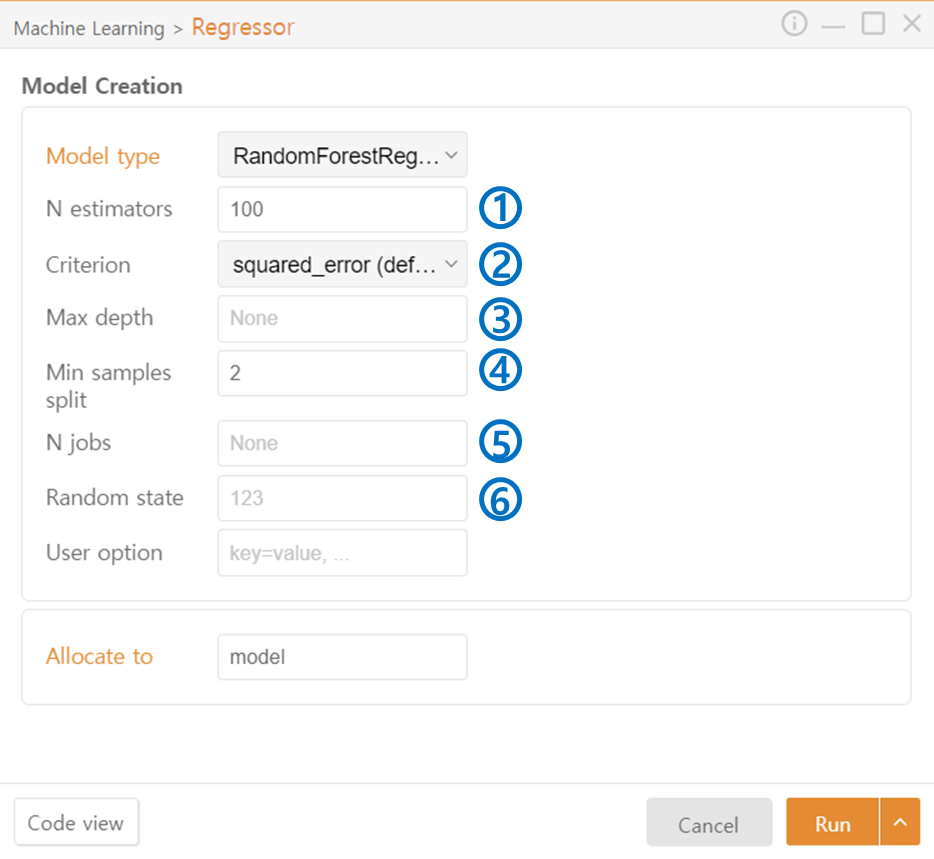

RandomForest Regressor

N estimators: Specifies the number of trees in the ensemble.

Criterion: Specifies the measure used for node splitting.

Max depth: Specifies the maximum depth of the tree.

Min Samples Split: Specifies the minimum number of samples required to split a node.

N jobs: Specifies the number of CPU cores or threads to be used during model training.

Random State: Sets the seed value for the random number generator used in model training.

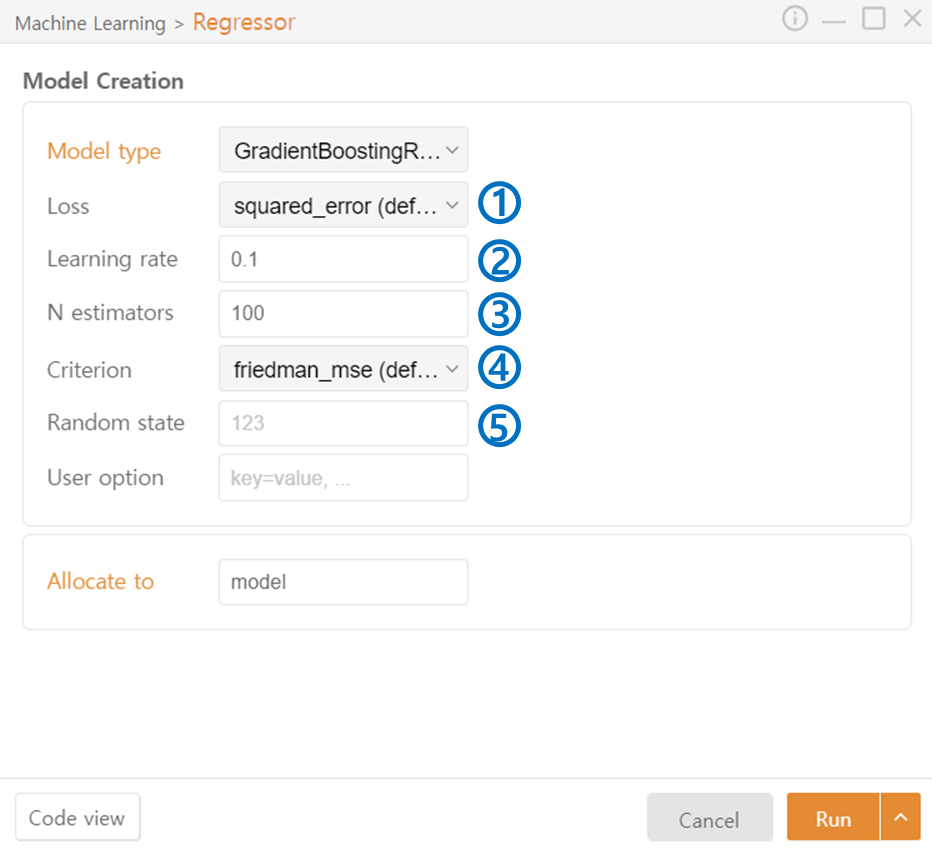

GradientBoosting Regressor

Loss: Specifies the loss function used.

Learning rate: Scales the contribution of each tree. Smaller values learn more slowly and usually need more trees.

N estimators: Specifies the number of trees in the ensemble.

Criterion: Specifies the measure used for node splitting.

Random State: Sets the seed value for the random number generator used in model training.

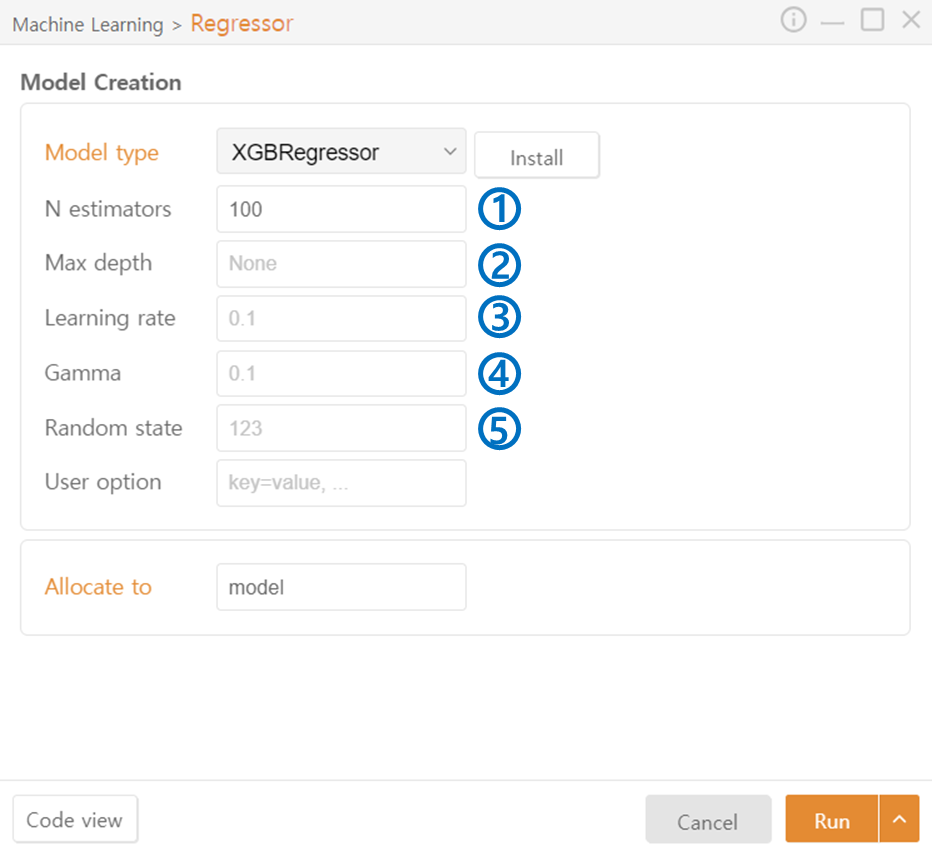

XGB Regressor

N estimators: Specifies the number of trees in the ensemble.

Max depth: Specifies the maximum depth of the tree.

Learning rate: Scales the contribution of each tree. Smaller values learn more slowly and usually need more trees.

Gamma: Specifies the minimum loss reduction required to make a further partition.

Random State: Sets the seed value for the random number generator used in model training.

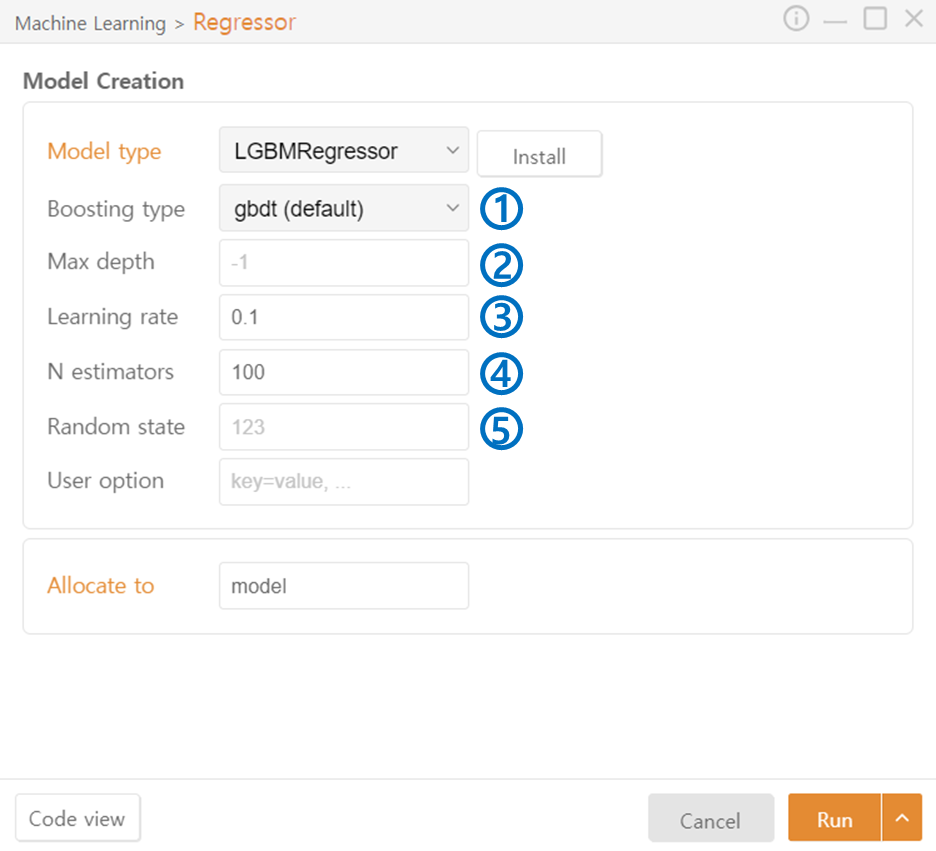

LGBM Regressor

Boosting type: Specifies the boosting type used in the algorithm.

Max depth: Specifies the maximum depth of the tree.

Learning Rate: Scales the contribution of each tree. Smaller values learn more slowly and usually need more trees.

N estimators: Specifies the number of trees in the ensemble.

Random State: Sets the seed value for the random number generator used in model training.

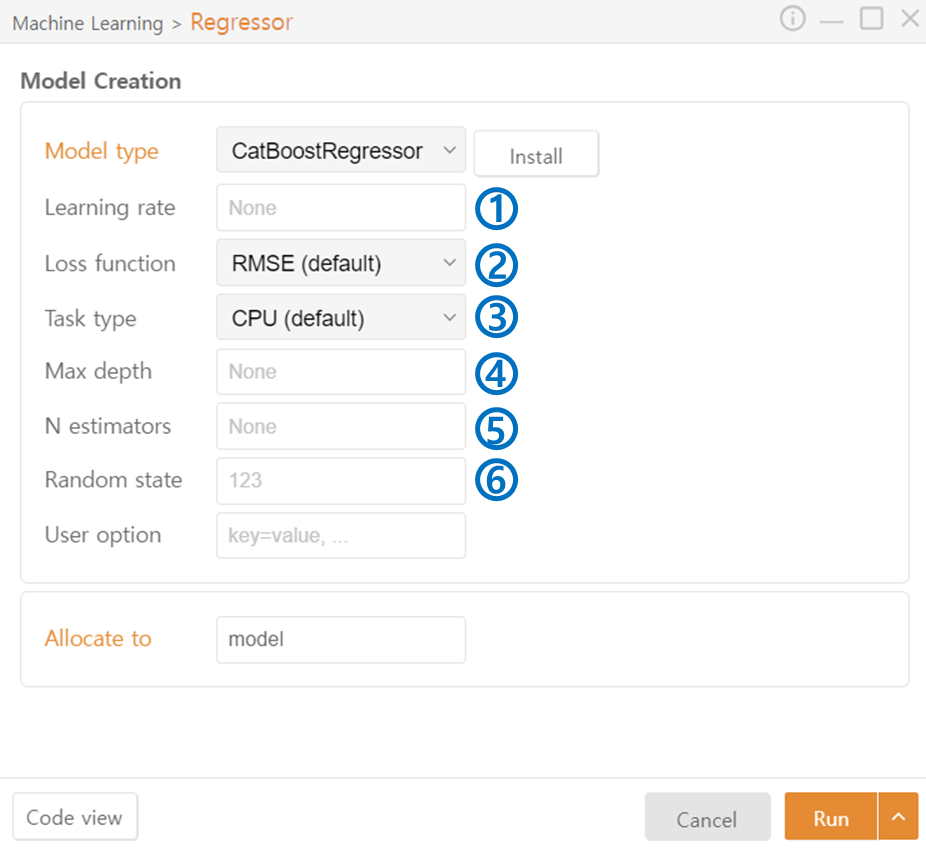

CatBoost Regressor

Learning rate: Scales the contribution of each tree. Smaller values learn more slowly and usually need more trees.

Loss function: Specifies the loss function used.

Task Type: Specifies the hardware (CPU or GPU) used for training.

Max Depth: Specifies the maximum depth of the tree.

N estimators: Specifies the number of trees in the ensemble.

Random State: Sets the seed value for the random number generator used in model training.
